Running SimpleOpenNI 1.96 and Processing 3.5.4 within Windows 10 with a Kinect 360 camera

Written July 2021

Dr Jennifer Martay, Anglia Ruskin University

After installing all of the software, I used the “Hello World” of Kinect to check that everything was running properly. This code is on p. 62 of Making Things See by Greg Borenstein, a book I used extensively while learning and highly recommend. Paste the code below into Processing and click Run; fingers crossed, you’ll see the Kinect’s depth image and RGB image appear side by side in one window.

import SimpleOpenNI.*;

SimpleOpenNI kinect;

void setup()
{
  size(1280, 480);
  kinect = new SimpleOpenNI(this);
  kinect.enableDepth();
  kinect.enableRGB();
}

void draw()
{
  kinect.update();
  PImage depthImage = kinect.depthImage();
  PImage rgbImage = kinect.rgbImage();
  image(depthImage, 0, 0);
  image(rgbImage, 640, 0);
}

More ready-typed examples from the book are available here: https://github.com/atduskgreg/Making-Things-See-Examples

Note: All of the 2D and 3D point cloud examples in Making Things See worked well for me. I started running into problems at “Working with Skeleton Data” (Ch 4). Newer versions of Processing and/or the SimpleOpenNI library appear to use different function calls from the ones printed in the book.

Here are the changes I needed to make the skeleton calibration work (pp. 191-192):

firstTrackAndCalibrate

import SimpleOpenNI.*;

SimpleOpenNI kinect;

void setup()
{
  size(640, 480);
  kinect = new SimpleOpenNI(this);
  kinect.enableDepth();
  // turn on user tracking
  kinect.enableUser(); // in older versions, this took an argument
}

void draw()
{
  kinect.update();
  PImage depth = kinect.depthImage();
  image(depth, 0, 0);

  // make a vector of ints to store the list of users
  IntVector userList = new IntVector();
  // write the list of detected users into the vector
  kinect.getUsers(userList);

  if (userList.size() > 0)
  {
    int userId = userList.get(0);
    if (kinect.isTrackingSkeleton(userId))
    {
      // make a vector to store the left hand
      PVector leftHand = new PVector();
      // put the position of the left hand into that vector
      float confidence = kinect.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_LEFT_HAND, leftHand);

      // convert the detected hand position to match the depth image
      PVector convertedLeftHand = new PVector();
      kinect.convertRealWorldToProjective(leftHand, convertedLeftHand);

      // and display it, but only if the tracker is reasonably confident
      if (confidence > 0.5)
      {
        fill(255, 0, 0);
        float ellipseSize = map(convertedLeftHand.z, 700, 2500, 50, 1);
        ellipse(convertedLeftHand.x, convertedLeftHand.y, ellipseSize, ellipseSize);
      }
    }
  }
}

// user tracking callbacks
// in older versions, all of the callbacks below were different

void onNewUser(SimpleOpenNI curContext, int userId)
{
  println("onNewUser - userId: " + userId);
  println("\tstart tracking skeleton");
  kinect.startTrackingSkeleton(userId);
}

void onLostUser(SimpleOpenNI curContext, int userId)
{
  println("onLostUser - userId: " + userId);
}

void onVisibleUser(SimpleOpenNI curContext, int userId)
{
  println("onVisibleUser - userId: " + userId);
}
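The ellipse size in the sketch above comes from Processing’s map(), which linearly rescales the hand’s z value from the range 700 to 2500 onto the range 50 to 1, so a nearer hand is drawn larger. The plain-Java sketch below reproduces that arithmetic so it can be checked without a Kinect attached. The class and method names are hypothetical, the z values are assumed to be in millimetres, and the clamped variant is my own suggestion rather than something from the book.

```java
// Hypothetical helper: reproduces the arithmetic of Processing's map()
// as used for the ellipse size, so it can be tested without a Kinect.
class DepthToSize {
    static final float NEAR_Z = 700f;   // depth mapped to the largest ellipse
    static final float FAR_Z = 2500f;   // depth mapped to the smallest ellipse
    static final float MAX_SIZE = 50f;
    static final float MIN_SIZE = 1f;

    // Same linear formula that Processing's map() uses (no clamping).
    static float linearMap(float value, float start1, float stop1,
                           float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Assumed extra step: keep the size within 1..50 when the hand is
    // nearer than NEAR_Z or further than FAR_Z, where map() alone would
    // return sizes above 50 or below 1 (even negative).
    static float clampedSize(float z) {
        float raw = linearMap(z, NEAR_Z, FAR_Z, MAX_SIZE, MIN_SIZE);
        return Math.max(MIN_SIZE, Math.min(MAX_SIZE, raw));
    }

    public static void main(String[] args) {
        System.out.println(linearMap(700f, NEAR_Z, FAR_Z, MAX_SIZE, MIN_SIZE));  // 50.0
        System.out.println(linearMap(1600f, NEAR_Z, FAR_Z, MAX_SIZE, MIN_SIZE)); // 25.5
        System.out.println(linearMap(2500f, NEAR_Z, FAR_Z, MAX_SIZE, MIN_SIZE)); // 1.0
        System.out.println(clampedSize(3000f));                                  // 1.0
    }
}
```

In the Processing sketch itself, the same clamping could be done with the built-in constrain() function wrapped around the map() call.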

The code on pp. 247-248 also did not work for me. In this example, different users and features within the Kinect’s view are shown in different colours. It relies on the command “kinect.enableScene”, which does not appear to work in my setup (SimpleOpenNI 1.96 with Processing 3.5.4). Other people suggested using “kinect.enableUser” instead. With this change I could access the pixels representing people, but not those of other background objects. This wasn’t something I was desperate to do anyway, so I did not look extensively for updated commands.
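As a rough illustration of what “accessing the pixels representing people” can look like, the plain-Java sketch below recolours every pixel flagged as belonging to a user. It assumes the library exposes a per-pixel user map as an int array in which 0 means “no user” and any other value is a user ID; I believe SimpleOpenNI 1.96 provides this through kinect.userMap() once enableUser() has been called, but treat that as an assumption to check against your installed version. The class and method names are hypothetical.

```java
// Hypothetical helper: highlights user pixels given a per-pixel user map.
// In a Processing sketch the two arrays would presumably come from
// kinect.depthImage().pixels and kinect.userMap() (assumed API).
class UserPixelMask {

    // Returns a copy of depthPixels in which every pixel that belongs to
    // any user (non-zero entry in userMap) is replaced by highlightColor.
    static int[] highlightUsers(int[] depthPixels, int[] userMap, int highlightColor) {
        if (depthPixels.length != userMap.length) {
            throw new IllegalArgumentException("pixel array and user map must have the same length");
        }
        int[] result = new int[depthPixels.length];
        for (int i = 0; i < depthPixels.length; i++) {
            result[i] = (userMap[i] != 0) ? highlightColor : depthPixels[i];
        }
        return result;
    }

    public static void main(String[] args) {
        int green = 0xFF00FF00;                       // ARGB, as Processing stores colours
        int[] depthPixels = {0xFF101010, 0xFF202020, 0xFF303030, 0xFF404040};
        int[] userMap     = {0, 1, 1, 0};             // two middle pixels belong to user 1
        int[] out = highlightUsers(depthPixels, userMap, green);
        for (int pixel : out) {
            System.out.println(Integer.toHexString(pixel));
        }
    }
}
```

Background objects never receive a non-zero entry in such a map, which matches what I saw: people can be picked out, but the rest of the scene cannot.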

Chapter 5 is about 3D scanning. The two programs used in this chapter are ModelBuilder v4 and MeshLab 2021.07, both of which downloaded without any problems. I did have to add two import lines above setup() to get SimpleDateFormat to work, though:

import java.text.SimpleDateFormat;
import java.util.Date;
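For context, here is a minimal sketch of the kind of thing these two classes are typically used for: building a timestamped file name so that each scan is saved under a new name. The class name, method name, date pattern, “scan” prefix and “.stl” extension are all illustrative assumptions, not the book’s exact code.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

// Hypothetical helper showing what SimpleDateFormat and Date provide.
class ScanFileName {

    // Builds a name such as prefix_yyyyMMdd_HHmmss.stl from the given time.
    // Locale.ROOT keeps the digits plain ASCII whatever the system locale is.
    static String timestampedName(String prefix, Date when) {
        SimpleDateFormat format = new SimpleDateFormat("yyyyMMdd_HHmmss", Locale.ROOT);
        return prefix + "_" + format.format(when) + ".stl";
    }

    public static void main(String[] args) {
        // new Date() is "now", so the printed name changes on every run.
        System.out.println(timestampedName("scan", new Date()));
    }
}
```

Without the two imports, Processing 3.5.4 does not recognise the SimpleDateFormat and Date class names, which is why the sketch failed until I added them.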

Other Articles:

Three Crystal StructuresA ThreeJS model of three crystal structures |

|

|

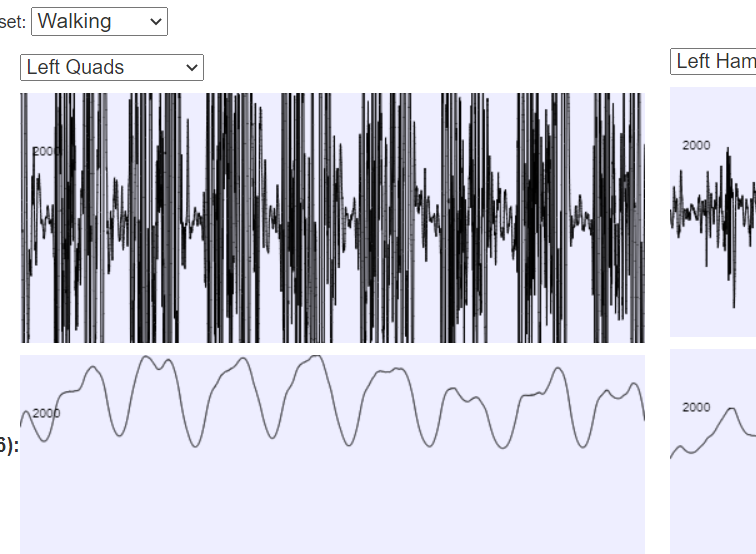

BodyWorks: EMG AnalysisA page with a javascript application where you can interact with EMG data using various filters. |

|

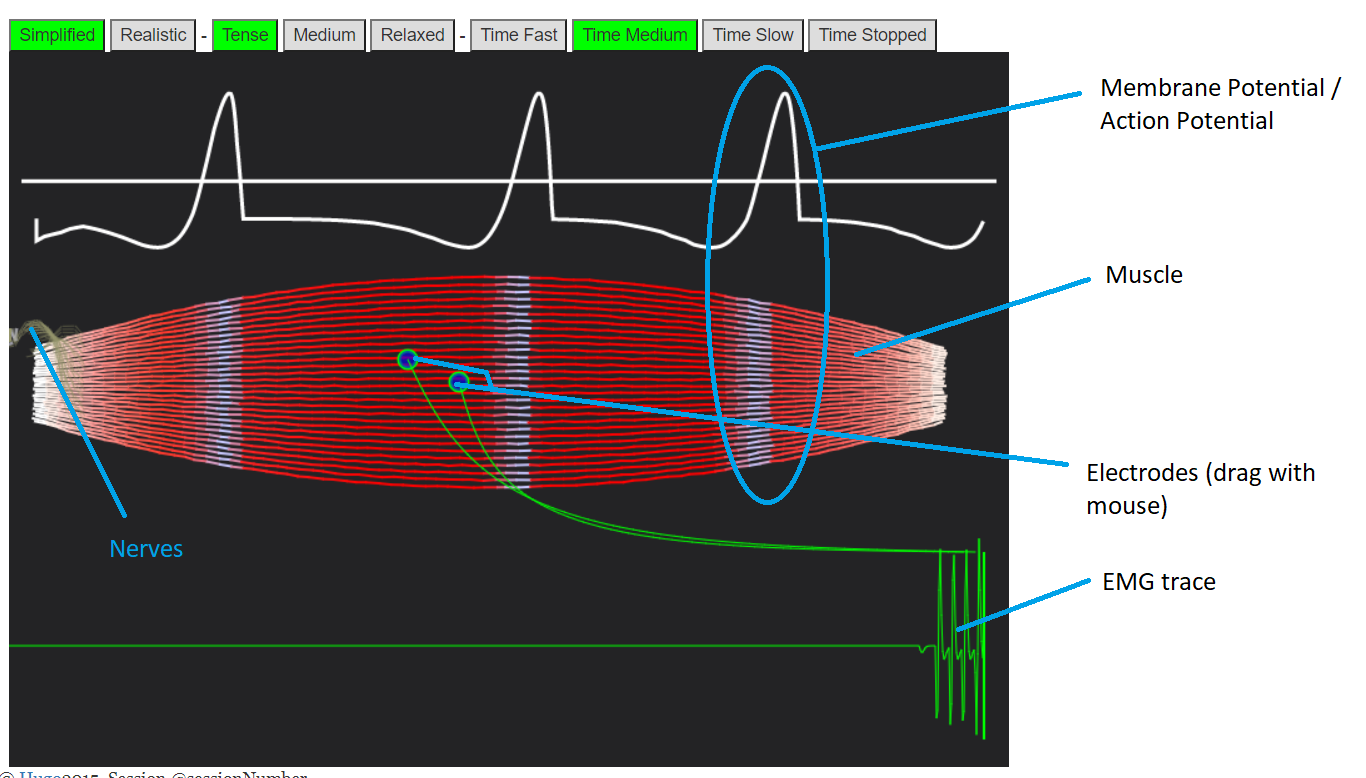

BodyWorks: Neuromuscular Activity / Muscle EMGAn interactive simulation showing how nerves travel to and down muscles, and how this gets picked up by EMG sensors. |